Akash Srivastava

I am the Director and Chief Architect of Core AI at IBM, where I lead AgentOps—a platform for building, evaluating, and optimizing agentic AI systems.

I am a Principal Investigator at the MIT-IBM Watson AI Lab, where I led the post-training team for Granite. I also founded the Red Hat AI Innovation Team (now in an advisory role), where we created InstructLab, an open-source framework for LLM customization.

I obtained my PhD at the University of Edinburgh, where I worked with Prof Charles Sutton and Prof Michael U. Gutmann on variational inference and generative models.

Research

My research interests include agentic AI systems, inference-time scaling and reasoning, post-training and model customization, and generative modeling.

Publications

Inference-Time Scaling, Sampling, & Probabilistic Inference (LLMs)

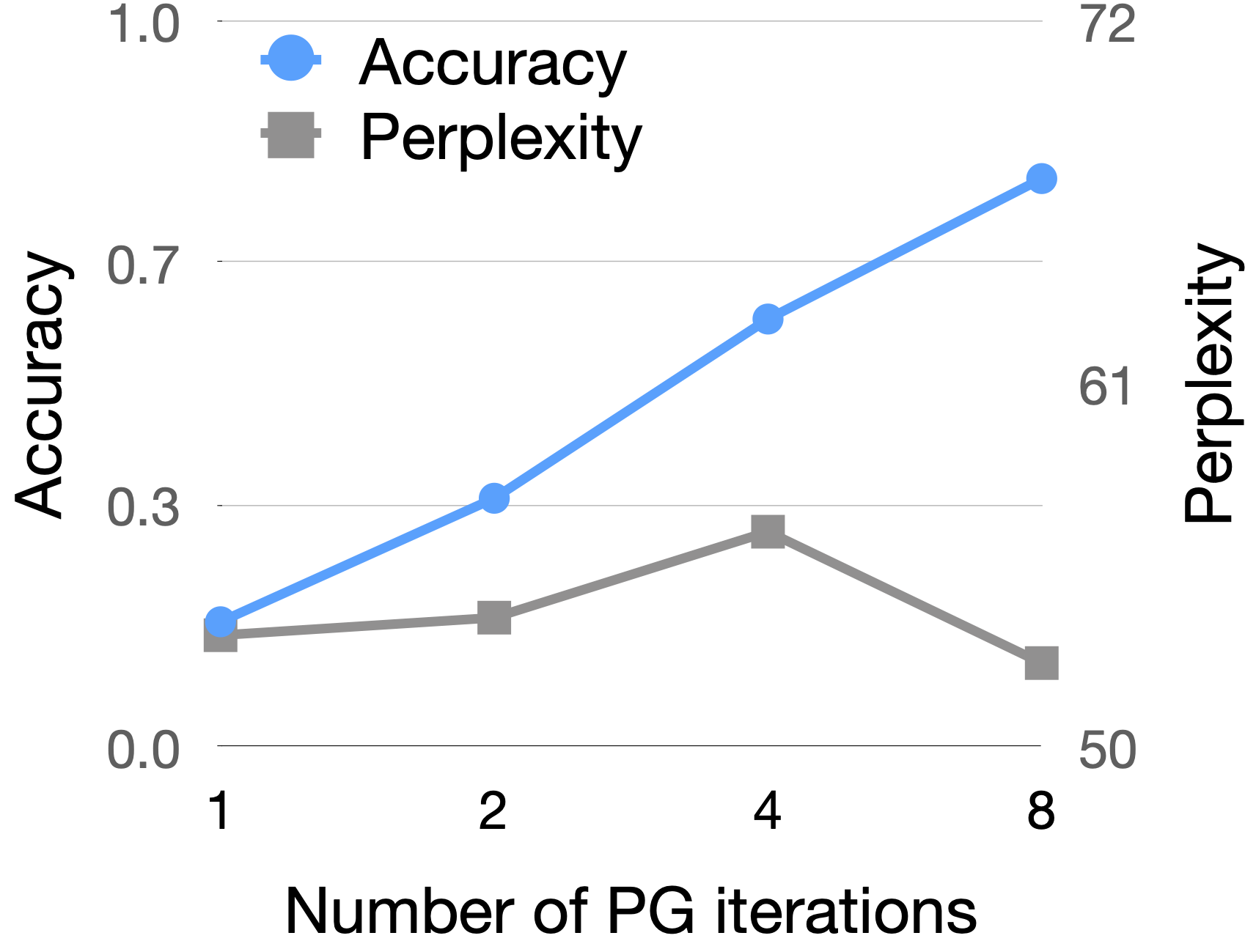

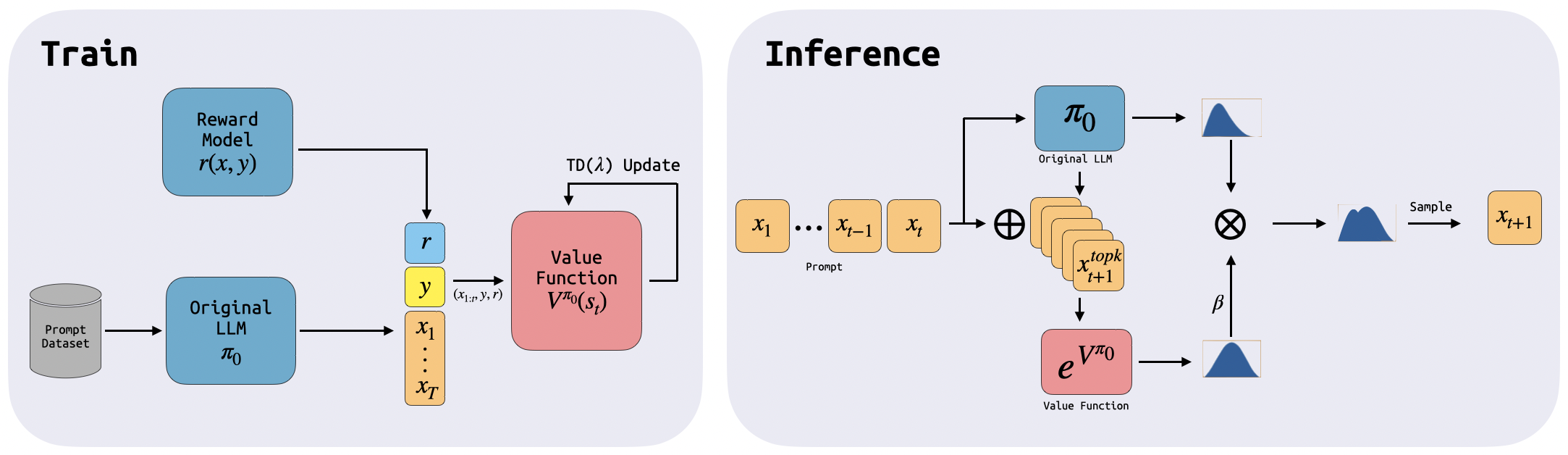

Giorgio Giannone, Guangxuan Xu, Nikhil Shivakumar Nayak, Rohan Mahesh Awhad, Shivchander Sudalairaj, Kai Xu, Akash Srivastava

arXiv preprint, 2025

Isha Puri, Shivchander Sudalairaj, Guangxuan Xu, Kai Xu, Akash Srivastava

arXiv preprint, 2025

Meihua Dang, Jiaqi Han, Minkai Xu, Kai Xu, Akash Srivastava, Stefano Ermon

arXiv preprint, 2025

Kai Xu, Akash Srivastava, Charles Sutton

ICML, 2019

Alignment, Preference Learning, & Human Feedback

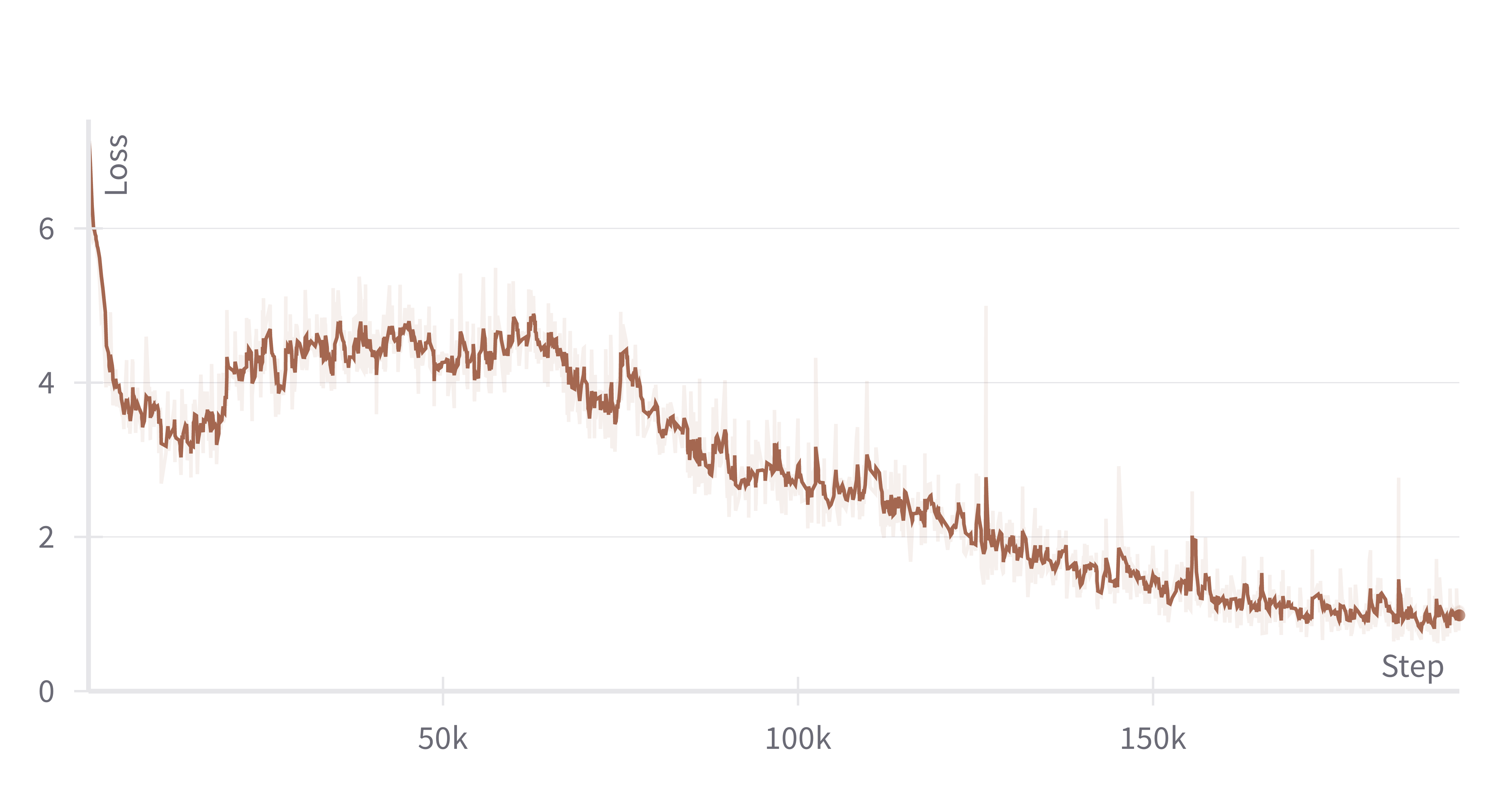

Shivchander Sudalairaj, Abhishek Bhandwaldar, Aldo Pareja, Kai Xu, David D. Cox, Akash Srivastava

arXiv preprint, 2024

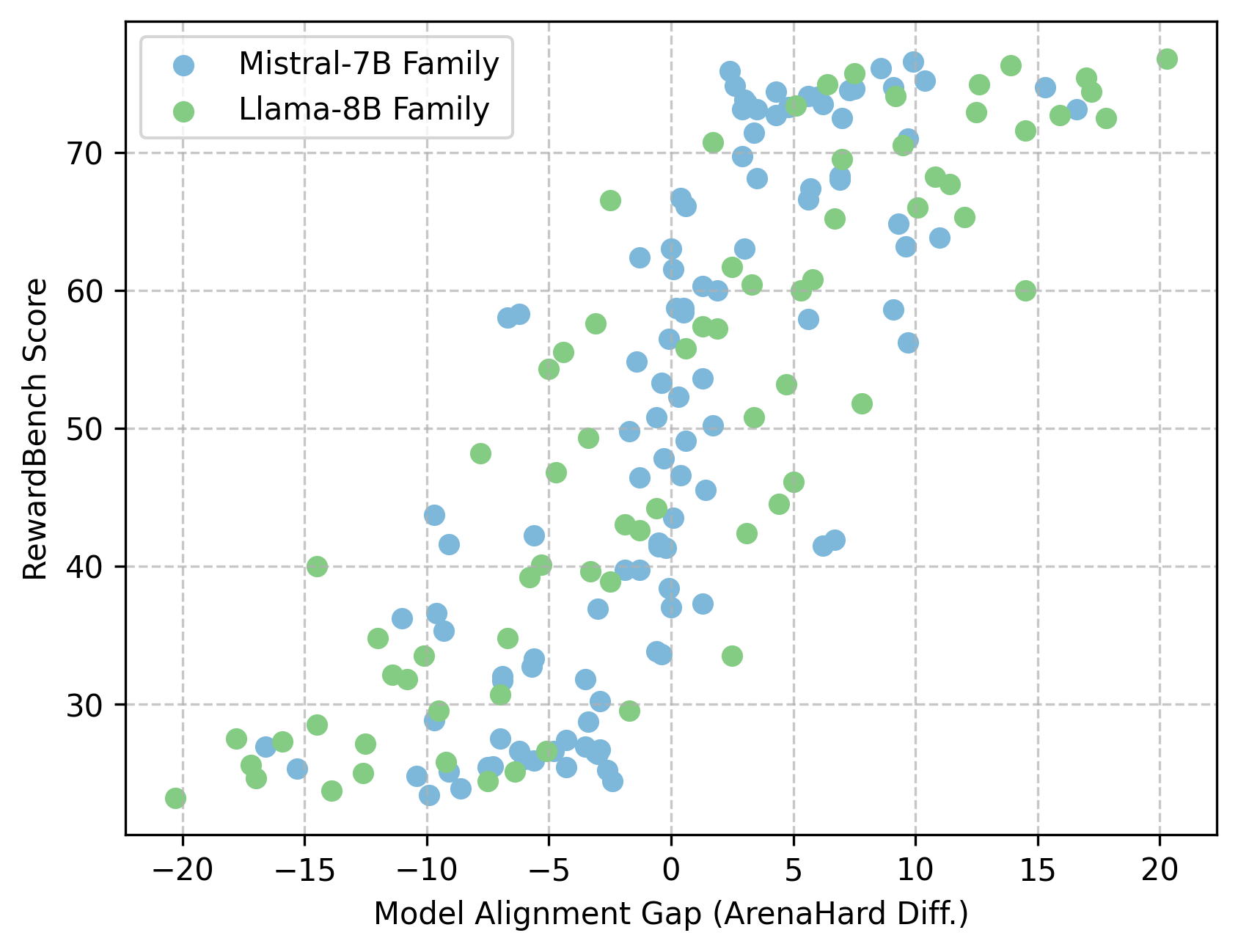

Guangxuan Xu, Kai Xu, Shivchander Sudalairaj, Hao Wang, Akash Srivastava

arXiv preprint, 2024

Seungwook Han, Idan Shenfeld, Akash Srivastava, Yoon Kim, Pulkit Agrawal

arXiv preprint, 2024

Hao Wang, Shivchander Sudalairaj, John Henning, Kristjan Greenewald, Akash Srivastava

NeurIPS, 2023

Model Merging, Fine-Tuning, & Parameter Efficiency

Amin Heyrani Nobari, Kaveh Alim, Ali ArjomandBigdeli, Akash Srivastava, Faez Ahmed, Navid Azizan

arXiv preprint, 2025

Nikhil Shivakumar Nayak, Krishnateja Killamsetty, Ligong Han, Abhishek Bhandwaldar, Prateek Chanda, Kai Xu, Hao Wang, Aldo Pareja, Oleg Silkin, Mustafa Eyceoz, Akash Srivastava, et al.

arXiv preprint, 2025

Hao Wang, Ligong Han, Kai Xu, Akash Srivastava

arXiv preprint, 2025

Mustafa Eyceoz, Nikhil Shivakumar Nayak, Hao Wang, Ligong Han, Akash Srivastava

arXiv preprint, 2025

Aldo Pareja, Nikhil Shivakumar Nayak, Hao Wang, Krishnateja Killamsetty, Shivchander Sudalairaj, Wenlong Zhao, Seungwook Han, Abhishek Bhandwaldar, Guangxuan Xu, Kai Xu, Akash Srivastava, et al.

arXiv preprint, 2024

Diffusion Models & Generative Modeling

Xinxi Zhang, Song Wen, Ligong Han, Felix Juefei-Xu, Akash Srivastava, Junzhou Huang, Vladimir Pavlovic, Hao Wang, Molei Tao, Dimitris Metaxas

WACV, 2025

Xiaoxiao He, Quan Dao, Ligong Han, Song Wen, Minhao Bai, Di Liu, Han Zhang, Martin Renqiang Min, Felix Juefei-Xu, Chaowei Tan, Akash Srivastava, et al.

arXiv preprint, 2024

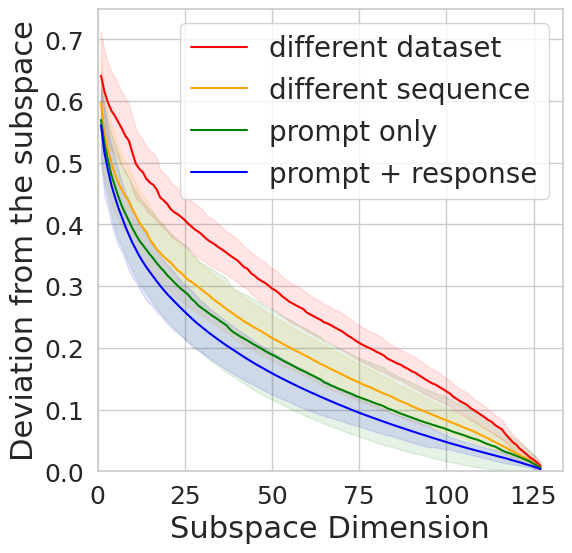

Giorgio Giannone, Akash Srivastava, Ole Winther, Faez Ahmed

NeurIPS, 2023

Synthetic Data, Privacy, & Density Ratio Estimation

Kaveh Alimohammadi, Hao Wang, Ojas Gulati, Akash Srivastava, Navid Azizan

arXiv preprint, 2024

Haoyuan Sun, Navid Azizan, Akash Srivastava, Hao Wang

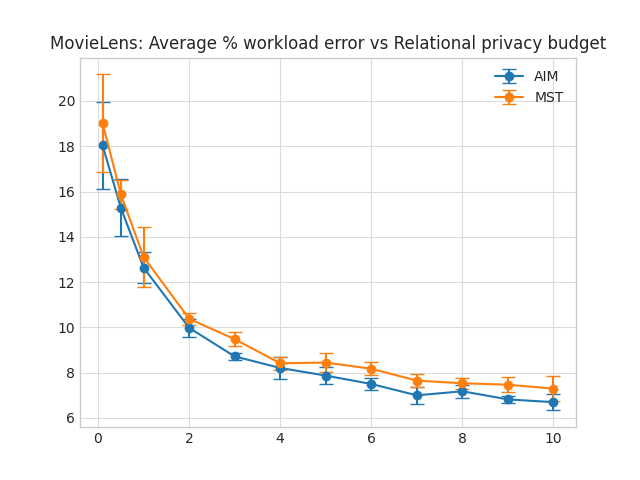

arXiv preprint, 2023

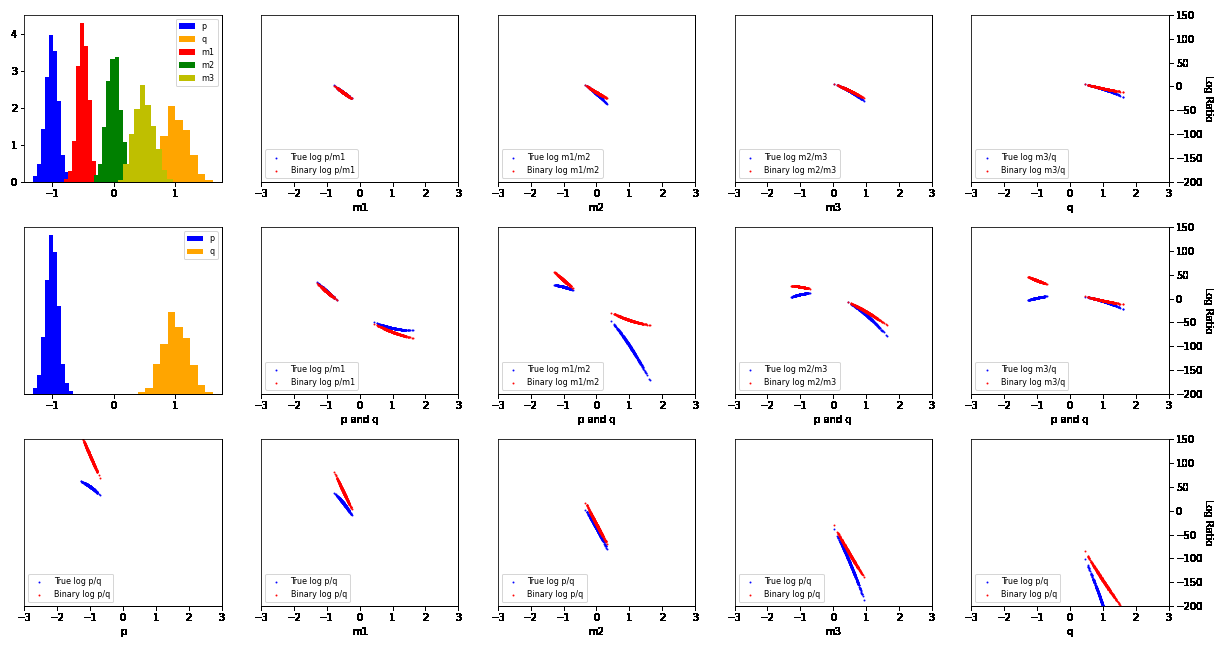

Akash Srivastava, Seungwook Han, Kai Xu, Benjamin Rhodes, Michael U. Gutmann

arXiv preprint, 2023

Akash Srivastava, Michael U. Gutmann, Kai Xu, Charles Sutton

ICLR, 2020

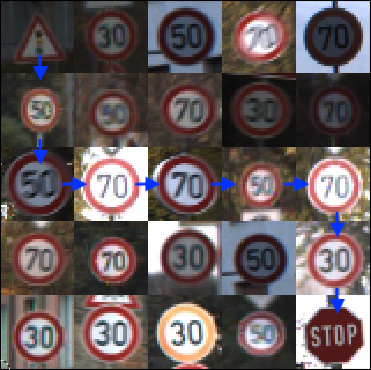

Red-Teaming, Robustness, & Evaluation

Zhang-Wei Hong, Idan Shenfeld, Tsun-Hsuan Wang, Yung-Sung Chuang, Aldo Pareja, James Glass, Akash Srivastava, Pulkit Agrawal

arXiv preprint, 2024

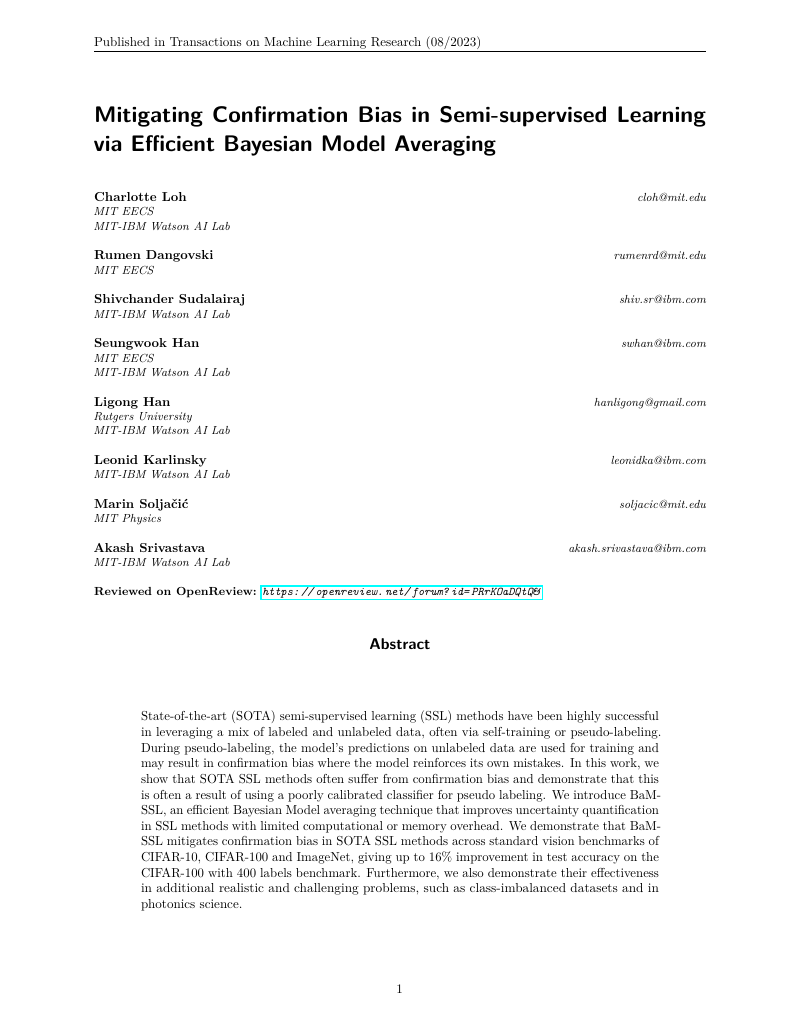

Charlotte Loh, Rumen Dangovski, Shivchander Sudalairaj, Seungwook Han, Ligong Han, Leonid Karlinsky, Marin Soljacic, Akash Srivastava

arXiv preprint, 2022

Charlotte Loh, Rumen Dangovski, Shivchander Sudalairaj, Seungwook Han, Ligong Han, Leonid Karlinsky, Marin Soljacic, Akash Srivastava

TMLR, 2023

Foundation Models for Planning, Design, & Systems

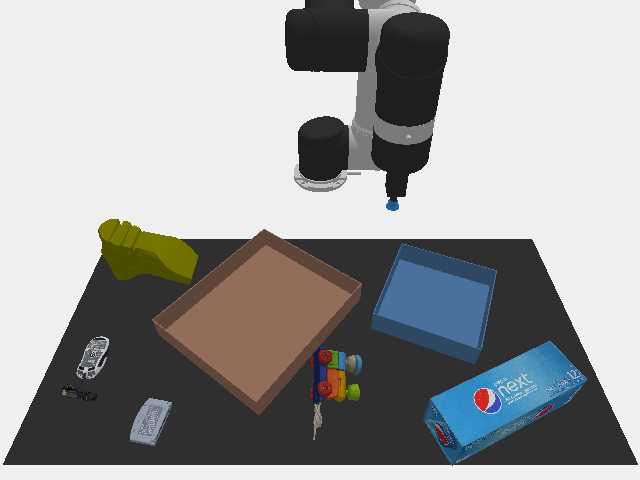

Anurag Ajay, Seungwook Han, Yilun Du, Shuang Li, Abhi Gupta, Tommi Jaakkola, Josh Tenenbaum, Leslie Kaelbling, Akash Srivastava, Pulkit Agrawal

NeurIPS, 2023

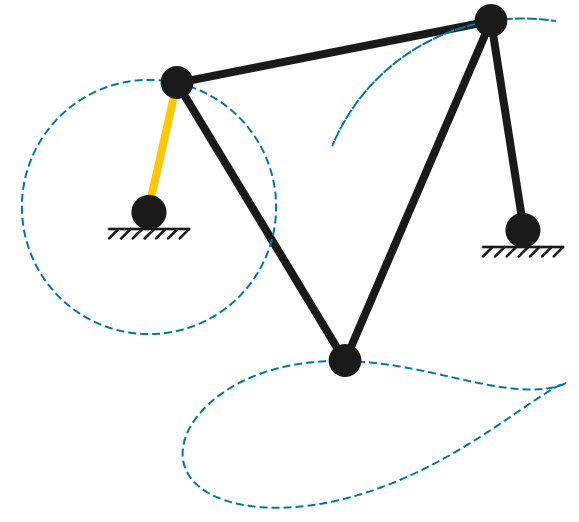

Amin Heyrani Nobari, Akash Srivastava, Dan Gutfreund, Kai Xu, Faez Ahmed

arXiv preprint, 2024

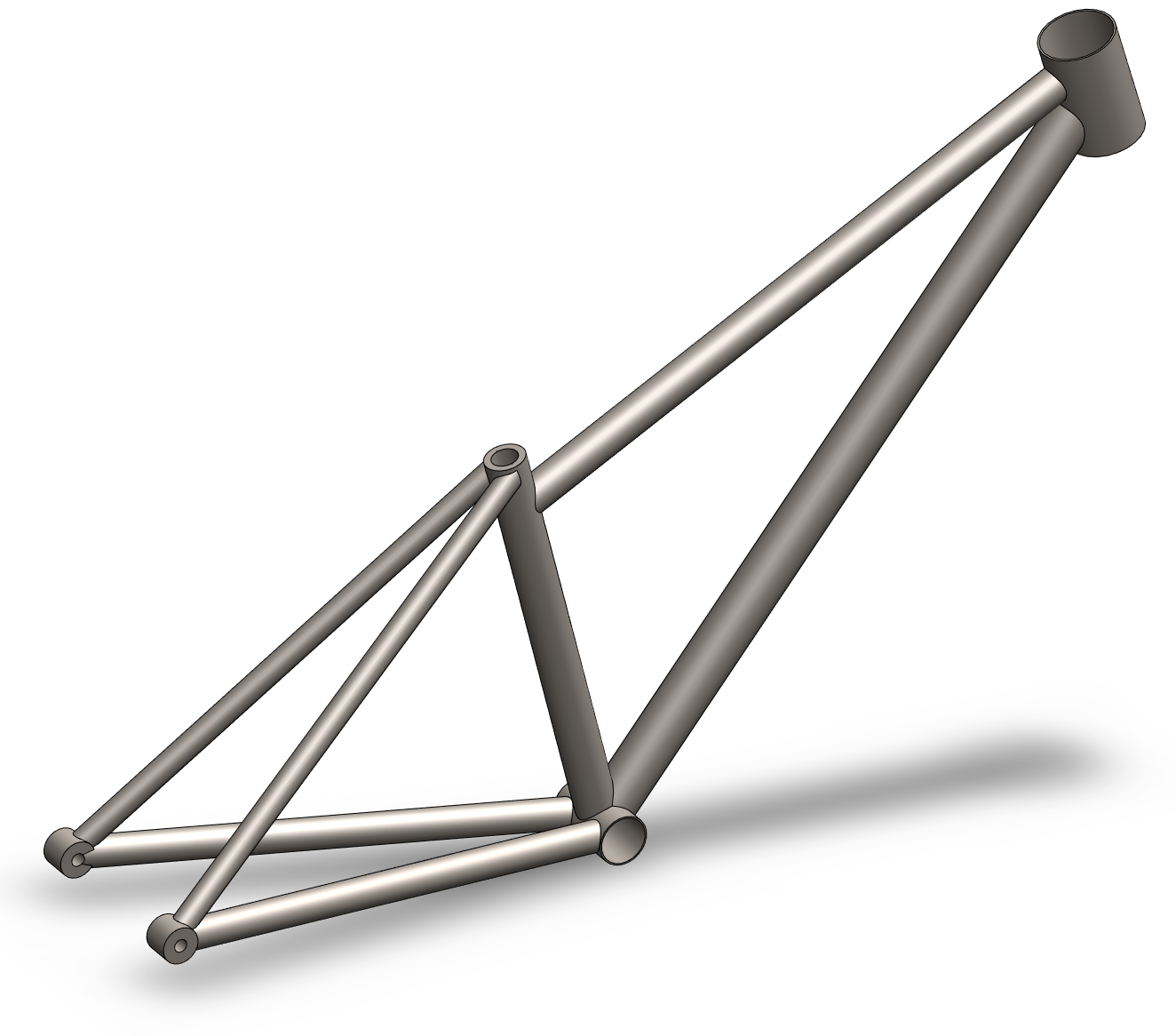

Lyle Regenwetter, Akash Srivastava, Dan Gutfreund, Faez Ahmed

Computer-Aided Design, 2023

Lifelong Learning, Program Synthesis, & Modularity

Lazar Valkov, Dipak Chaudhari, Akash Srivastava, Charles Sutton, Swarat Chaudhuri

NeurIPS, 2018

Lazar Valkov, Akash Srivastava, Swarat Chaudhuri, Charles Sutton

arXiv preprint, 2023

Lazar Valkov, Dipak Chaudhari, Akash Srivastava, Charles Sutton, Swarat Chaudhuri

arXiv preprint, 2018

Core Generative Modeling & Bayesian ML

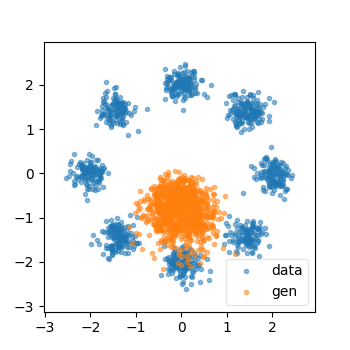

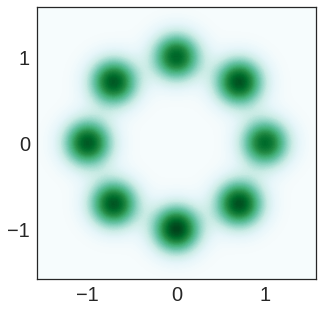

Akash Srivastava, Lazar Valkov, Chris Russell, Michael U. Gutmann, Charles Sutton

NeurIPS, 2017

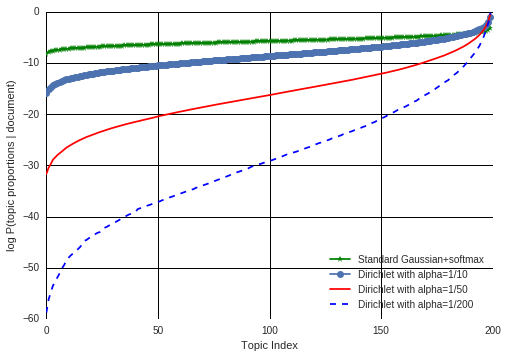

Akash Srivastava, Charles Sutton

ICLR, 2017

Mohammad Emtiyaz Khan, Didrik Nielsen, Voot Tangkaratt, Wu Lin, Yarin Gal, Akash Srivastava

ICML, 2018

Akash Srivastava

PhD Thesis, University of Edinburgh, 2019

Applied / Cross-Domain Modeling

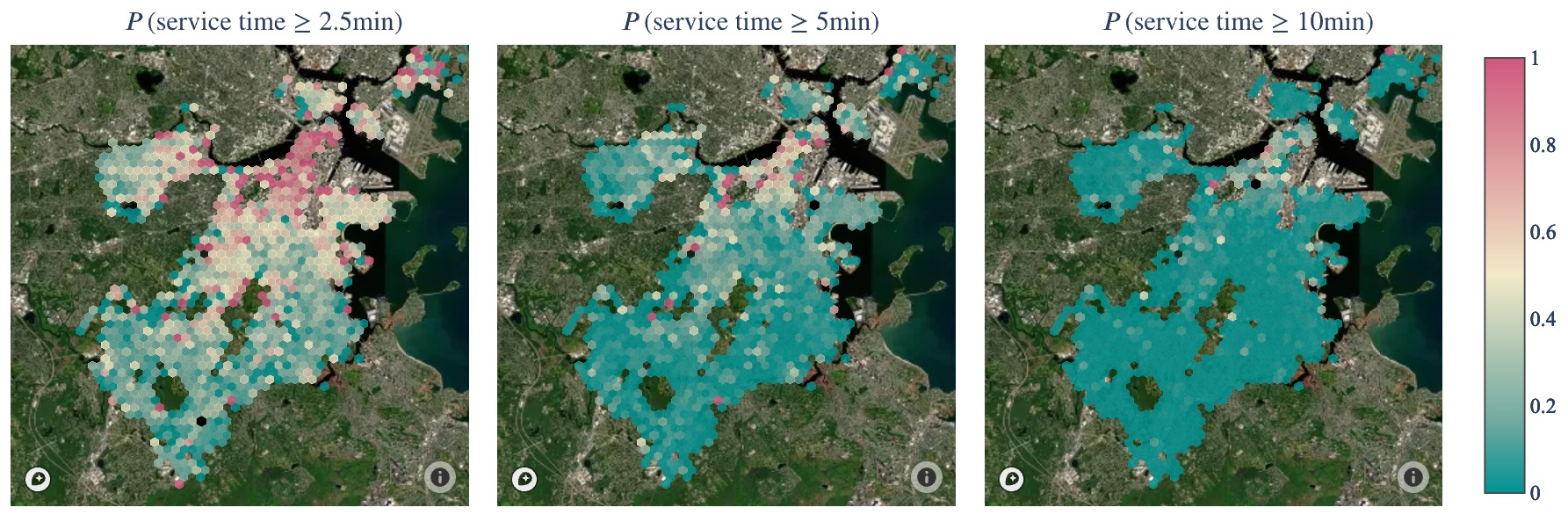

Samuel JK Chin, Matthias Winkenbach, Akash Srivastava

arXiv preprint, 2024

Maxwell Schrader, Navish Kumar, Esben Sorig, Soonmyeong Yoon, Akash Srivastava, Kai Xu, Maria Astefanoaei, Nicolas Collignon

arXiv preprint, 2024

For complete citation information and PDFs, visit my Google Scholar profile.